Classifying documents using Apache Mahout

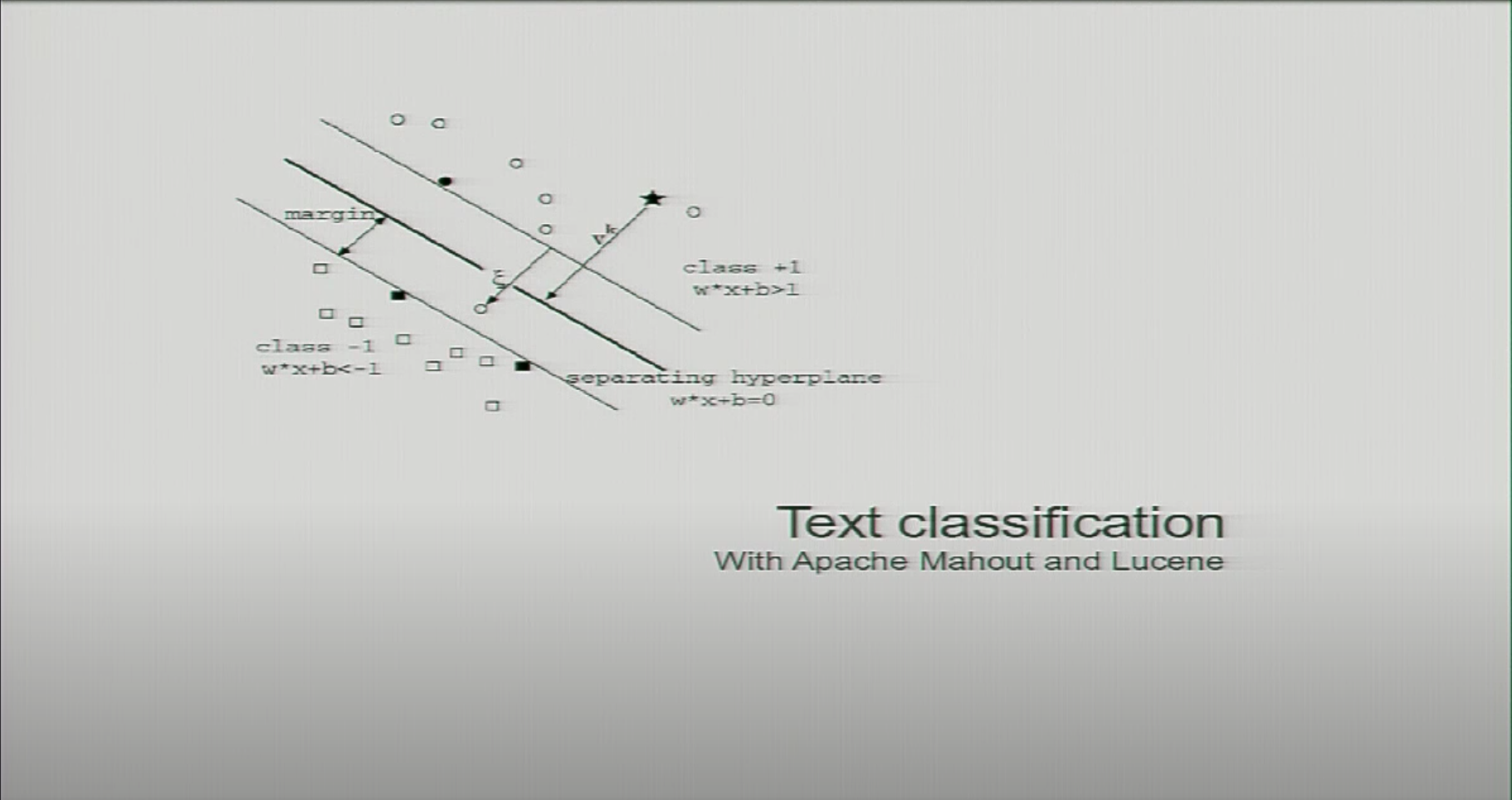

I wondered how to do some text classification with Java and Apache Mahout. At the Lucene/Solr Revolution conference (Dublin, 2013), Isabel Drost-Fromm gave a talk on exactly this topic: how Apache Mahout and Lucene can help you classify text.

It is a good introduction to the topic, and I really enjoyed what was presented in the talk.

Lucene, Mahout, and (a little bit of) Hadoop make a great combination for a talk about text classification.

The overall process for classifying documents follows the steps below, where the output of each component becomes the input of the next step:

1. HTML >> Apache Tika
2. Full text >> Lucene Analyzer
3. Token stream >> FeatureVectorEncoder
4. Vector >> Online Learner
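To make the four stages concrete, here is a minimal, dependency-free Java sketch of the data flow. It is not the Tika, Lucene, or Mahout API: a regex tag stripper stands in for Tika, a lowercase split stands in for a Lucene Analyzer, the hashing trick (feature hashing) stands in for a feature vector encoder, and a hand-written logistic regression trained with stochastic gradient descent stands in for the online learner. All class and method names (`ClassificationPipelineSketch`, `SgdLogisticLearner`, `vectorize`, and so on) are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Dependency-free sketch of HTML >> text >> tokens >> vector >> online learner.
// Every stage is a simplified stand-in, NOT the Tika, Lucene, or Mahout API.
final class ClassificationPipelineSketch {

    // Stand-in for Apache Tika: drop HTML tags with a regex.
    // (Real-world HTML needs a real parser such as Tika.)
    static String extractText(String html) {
        return html.replaceAll("<[^>]*>", " ");
    }

    // Stand-in for a Lucene Analyzer: lowercase, then split on anything
    // that is not a letter or a digit.
    static List<String> tokenize(String text) {
        List<String> tokens = new ArrayList<>();
        for (String token : text.toLowerCase(Locale.ROOT).split("[^\\p{L}\\p{Nd}]+")) {
            if (!token.isEmpty()) {
                tokens.add(token);
            }
        }
        return tokens;
    }

    // Stand-in for a feature vector encoder: the hashing trick maps each
    // token to one of `dimensions` slots, so no vocabulary has to be stored.
    static double[] encode(List<String> tokens, int dimensions) {
        double[] vector = new double[dimensions];
        for (String token : tokens) {
            vector[Math.floorMod(token.hashCode(), dimensions)] += 1.0;
        }
        return vector;
    }

    // Chains the three stages above: HTML in, feature vector out.
    static double[] vectorize(String html, int dimensions) {
        return encode(tokenize(extractText(html)), dimensions);
    }

    // Stand-in for an online learner: binary logistic regression updated by
    // stochastic gradient descent, one document at a time.
    static final class SgdLogisticLearner {
        private final double[] weights;
        private final double learningRate;
        private double bias;

        SgdLogisticLearner(int dimensions, double learningRate) {
            this.weights = new double[dimensions];
            this.learningRate = learningRate;
        }

        // Probability that the document belongs to class 1.
        double probability(double[] x) {
            double z = bias;
            for (int i = 0; i < x.length; i++) {
                z += weights[i] * x[i];
            }
            return 1.0 / (1.0 + Math.exp(-z));
        }

        // One SGD step on the log-loss; label must be 0 or 1.
        void train(double[] x, int label) {
            double error = label - probability(x);
            for (int i = 0; i < x.length; i++) {
                weights[i] += learningRate * error * x[i];
            }
            bias += learningRate * error;
        }
    }

    public static void main(String[] args) {
        int dims = 1 << 16;
        SgdLogisticLearner learner = new SgdLogisticLearner(dims, 0.1);
        double[] spam = vectorize("<p>Buy cheap pills now!</p>", dims);
        double[] ham = vectorize("<p>Project meeting agenda notes</p>", dims);
        for (int epoch = 0; epoch < 100; epoch++) {
            learner.train(spam, 1);
            learner.train(ham, 0);
        }
        System.out.println(learner.probability(vectorize("<b>cheap pills</b>", dims)));
        System.out.println(learner.probability(vectorize("<i>meeting notes</i>", dims)));
    }
}
```

Running `main` trains on one spam-like and one ham-like document, then prints a probability above 0.5 for the first query and below 0.5 for the second. With only two training documents this illustrates how data moves through the stages, not real classification quality. The learner is "online" because it updates its weights one document at a time, so new examples can be absorbed without retraining from scratch.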

Of course, Isabel's advice was to reuse the libraries you already have at hand, take a look inside the algorithms they use, and improve them if you need to. As a first approach, this is a really good way for me to see how things work.

Mahout is an excellent library for machine learning. It used MapReduce to integrate with Hadoop (v1.0), although in April 2014 the project decided to move forward:

> The Mahout community decided to move its codebase onto modern data processing systems that offer a richer programming model and more efficient execution than Hadoop MapReduce.

(You can read that on their website.)

At the end of the video, there is a call for everyone to participate in the project: fixing bugs, writing documentation, and reporting bugs. There is always a lot to do in open-source projects. If you use these libraries and are interested in the project, I recommend subscribing to the mailing lists.

If you are interested in the field, I really recommend watching the video; it is a good talk on a good topic. You can take a look at the slides too.